[Repost] Beat Detection Using JavaScript and the Web Audio API

- Author: Joe Sullivan

- Origin: http://joesul.li/van/beat-detection-using-web-audio/


This article explores using the Web Audio API and JavaScript to accomplish beat detection in the browser. Let's define beat detection as determining (1) the location of significant drum hits within a song in order to (2) establish a tempo, in beats per minute (BPM).

If you're not familiar with using the Web Audio API and creating buffers, this tutorial will get you up to speed. We'll use "Go" by Grimes as our sample track for the examples below.

Presence of "peaks" in a song above 0.8, 0.9, and 0.95 respectively.

Premise

The central premise is that the peak/tempo data is readily available; people can, after all, very easily dance to music. Therefore, applying common sense to the data should reveal that information.

Setup

I've selected a sample song. We'll take various cracks at analyzing it and coming up with a reasonable algorithm for determining tempo, then we'll look out-of-sample to test our algorithm.

Song data

A song is a piece of music. Here we'll deal with it as an array of floats that represent the waveform of that music, sampled at 44,100 Hz.
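To make the "array of floats" concrete, here's a minimal sketch that summarizes a decoded buffer. It assumes `buffer` behaves like a Web Audio `AudioBuffer` (it has `sampleRate` and `getChannelData`); the helper name `describeBuffer` and the fake buffer are mine, for illustration only.

```javascript
// Hypothetical helper: summarize an AudioBuffer-like object.
// Assumes `buffer` exposes sampleRate and getChannelData(),
// as a decoded Web Audio AudioBuffer does.
function describeBuffer(buffer) {
  // Float32Array of samples for the first channel, nominally in [-1, 1]
  const samples = buffer.getChannelData(0);
  let loudest = 0;
  for (let i = 0; i < samples.length; i++) {
    loudest = Math.max(loudest, Math.abs(samples[i]));
  }
  return {
    sampleCount: samples.length,
    // Duration in seconds = number of samples / samples per second
    durationSeconds: samples.length / buffer.sampleRate,
    loudestSample: loudest
  };
}

// Stand-in for a decoded song: one second of silence with a single spike.
const fakeSamples = new Float32Array(44100);
fakeSamples[22050] = 0.5;
const fakeBuffer = {
  sampleRate: 44100,
  getChannelData: function () { return fakeSamples; }
};
const summary = describeBuffer(fakeBuffer);
```

In a browser you would pass the buffer you got back from decoding the track instead of `fakeBuffer`.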

Getting started

I placed my initial effort at the top of the screen. What you see is a chart of all the floats in the buffer (again, representing the song) above a certain threshold, mapped to their position within the song. There does appear to be some regularity to the peaks, but their distribution is hardly uniform within any threshold.

If we can find a way to graph the song with a very uniform distribution of peaks, it should be simple to determine tempo. Therefore I'll try to manipulate the audio buffer in order to achieve that type of distribution. This is where the Web Audio API comes in: I can use it to transform the audio in a relatively intuitive way.

    // Function to identify peaks
    function getPeaksAtThreshold(data, threshold) {
      var peaksArray = [];
      var length = data.length;
      for (var i = 0; i < length;) {
        if (data[i] > threshold) {
          peaksArray.push(i);
          // Skip forward ~ 1/4s to get past this peak
          // (10,000 samples is about 0.23s at 44,100 Hz).
          i += 10000;
        }
        i++;
      }
      return peaksArray;
    }
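As a rough illustration of how the threshold changes what gets counted, here's a sketch that runs a peak finder over a synthetic signal at the three thresholds charted above. The variant below expresses the skip in seconds instead of a fixed sample count; `sampleRate`, `skipSeconds`, and the synthetic signal are my own assumptions, not part of the original function.

```javascript
// Variant of the peak finder with the skip expressed in seconds.
// `sampleRate` and `skipSeconds` are illustrative parameters.
function getPeaks(data, threshold, sampleRate, skipSeconds) {
  const skip = Math.floor(sampleRate * skipSeconds);
  const peaks = [];
  for (let i = 0; i < data.length; i++) {
    if (data[i] > threshold) {
      peaks.push(i);
      i += skip; // jump past the rest of this hit
    }
  }
  return peaks;
}

// Synthetic two-second signal: a loud hit (0.97) on every half second,
// plus a quieter hit (0.85) halfway between the loud ones.
const rate = 44100;
const signal = new Float32Array(rate * 2);
for (let t = 0; t < 4; t++) {
  signal[Math.floor(t * rate / 2)] = 0.97;
  signal[Math.floor(t * rate / 2 + rate / 4)] = 0.85;
}

// Number of peaks found at each threshold.
const counts = [0.8, 0.9, 0.95].map(function (threshold) {
  return getPeaks(signal, threshold, rate, 0.2).length;
});
// counts is [8, 4, 4]: the quieter hits only register at 0.8
```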

Filtering

How simple is it to transform our song with the Web Audio API? Here's an example that passes the song through a low-pass filter and returns it.

    // Create offline context
    var offlineContext = new OfflineAudioContext(1, buffer.length, buffer.sampleRate);

    // Create buffer source
    var source = offlineContext.createBufferSource();
    source.buffer = buffer;

    // Create filter
    var filter = offlineContext.createBiquadFilter();
    filter.type = "lowpass";

    // Pipe the song into the filter, and the filter into the offline context
    source.connect(filter);
    filter.connect(offlineContext.destination);

    // Schedule the song to start playing at time:0
    source.start(0);

    // Act on the result (register the handler before rendering starts)
    offlineContext.oncomplete = function(e) {
      // Filtered buffer!
      var filteredBuffer = e.renderedBuffer;
    };

    // Render the song
    offlineContext.startRendering();

Now I'll run a low-, mid-, and high-pass filter over the song and graph the peaks measured from them. Because certain instruments are more faithful representatives of tempo (kick drum, snare drum, claps), I'm hoping that these filters will draw them out more so that "song peaks" will turn into "instrument peaks", or instrument hits.
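Switching between the three filters mostly comes down to changing the filter's `type` and cutoff. Here's a minimal sketch of a helper that wires up the graph for any of them. The helper name `buildFilterGraph` and the cutoff values in `filterSettings` are illustrative guesses on my part, not the values used for the charts.

```javascript
// Hypothetical helper: wire source -> biquad filter -> destination.
// `context` is expected to be an OfflineAudioContext (or AudioContext).
function buildFilterGraph(context, buffer, type, frequency) {
  const source = context.createBufferSource();
  source.buffer = buffer;

  const filter = context.createBiquadFilter();
  filter.type = type;                 // "lowpass", "highpass" or "bandpass"
  filter.frequency.value = frequency; // cutoff (or center) frequency in Hz

  source.connect(filter);
  filter.connect(context.destination);
  return { source: source, filter: filter };
}

// Illustrative settings only; tune the frequencies by ear or by chart.
const filterSettings = [
  { type: "lowpass", frequency: 150 },
  { type: "bandpass", frequency: 1000 },
  { type: "highpass", frequency: 4000 }
];

// In a browser, one render per setting might look like:
//   const ctx = new OfflineAudioContext(1, buffer.length, buffer.sampleRate);
//   const graph = buildFilterGraph(ctx, buffer, "lowpass", 150);
//   graph.source.start(0);
//   ctx.startRendering().then(function (filteredBuffer) { /* find peaks */ });
```

Each setting needs its own offline context, since a context renders only once.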

Peaks with a lowpass, highpass, and bandpass filter, respectively.

Each of these graphs has some pattern to it; hopefully, we're now looking at the pattern of individual instruments. The low-pass graph, in fact, is looking particularly good. I'd venture to guess that each peak we see on the low-pass graph represents a kick drum hit.

At this point it might be a good idea to take a look at what the data should look like, so we can start evaluating our method visually.

The actual tempo of the song is around 140 beats per minute. Let's take a look at that by drawing a line for each measure, then our lowpass filter again:

Each measure of the song is represented by a single line (top); Lowpass peaks as seen before (bottom)
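For reference, here's a small sketch of how the measure lines can be positioned. It assumes a constant tempo and four beats per measure; the function name and parameters are my own.

```javascript
// Sample positions at which each measure starts, assuming a constant
// tempo and a fixed number of beats per measure.
function measureStarts(bpm, durationSeconds, sampleRate, beatsPerMeasure) {
  const secondsPerMeasure = (60 / bpm) * beatsPerMeasure;
  const starts = [];
  // Multiply rather than accumulate to avoid floating-point drift
  for (let m = 0; m * secondsPerMeasure < durationSeconds; m++) {
    starts.push(Math.round(m * secondsPerMeasure * sampleRate));
  }
  return starts;
}

// First ten seconds of a 140 BPM track sampled at 44,100 Hz.
const starts = measureStarts(140, 10, 44100, 4);
// One measure lasts 4 * 60 / 140 seconds, i.e. 75,600 samples
```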

We seem to have a problem in that much of the song, especially the beginning and the end, is not being picked up in the peaks. Of course, this is because the beginning and end of the song are quieter, i.e. have fewer peaks above the threshold, than the rest of the song.

The volume of the song at a given measure.

Low-pass peaks, with the height of each bar representing the track volume at that point.
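One way to get a volume figure per stretch of the song is a root-mean-square (RMS) level per window. To be clear, using RMS here is my assumption for this sketch; it's a common loudness proxy, and `volumePerWindow` is a made-up name.

```javascript
// RMS level of each consecutive window of `windowSize` samples.
// RMS as a stand-in for "volume" is an assumption of this sketch.
function volumePerWindow(data, windowSize) {
  const volumes = [];
  for (let start = 0; start < data.length; start += windowSize) {
    const end = Math.min(start + windowSize, data.length);
    let sumOfSquares = 0;
    for (let i = start; i < end; i++) {
      sumOfSquares += data[i] * data[i];
    }
    volumes.push(Math.sqrt(sumOfSquares / (end - start)));
  }
  return volumes;
}

// Tiny example: a loud window followed by a silent one.
const levels = volumePerWindow([0.5, -0.5, 0.5, -0.5, 0, 0, 0, 0], 4);
// levels is [0.5, 0]
```

For one value per measure at 140 BPM and 44,100 Hz, the window would be 75,600 samples.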

Let's try something...

I think that the lowpass graph is tracking the drum rhythm well enough that we can now measure the length of a drum pattern and jump from there to tempo. But how to measure the length of a pattern?

Let's draw a histogram listing the average interval of time between peaks:

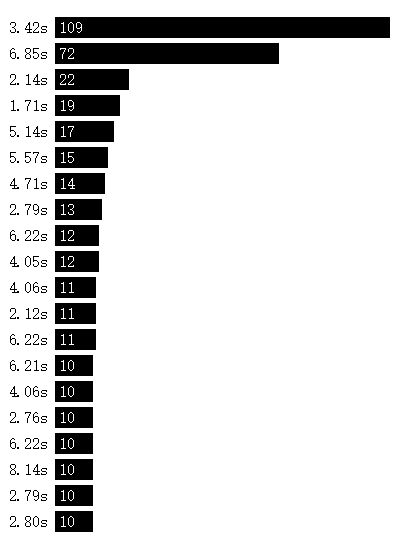

Average interval between peaks in a song. This measurement is not just of the closest neighbor but of all neighbors in the vicinity of a given peak.

This is very cool. I didn't expect to see a strong mode emerge, but with 3.42 and 3.42*2 taking first and second place, I think we can make one conclusion: 3.42s is a very important interval in this song!

Just how does 3.42s relate to the tempo? Might it be the length of a measure? Well, a song with measures of length 3.42s would have beats of length 0.855s, of which you can fit 70.175 into a minute. In other words: measure length 3.42s maps to 70BPM.

Of course, the magic number we're looking for is double that: 140. If you double the tempo, you halve the measure length, so the interval we're looking for is 1.72s, and as you can see we measured that duration 19 times. Isn't that nice?
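The arithmetic is simple enough to check in a couple of lines. This sketch assumes four beats per measure, as the reasoning above does; the function name is mine.

```javascript
// Tempo implied by a measure lasting `measureSeconds`,
// assuming `beatsPerMeasure` beats in each measure.
function measureLengthToBpm(measureSeconds, beatsPerMeasure) {
  const beatSeconds = measureSeconds / beatsPerMeasure;
  return 60 / beatSeconds;
}

const slowBpm = measureLengthToBpm(3.42, 4); // about 70.2
const fastBpm = measureLengthToBpm(1.71, 4); // about 140.4, twice slowBpm
```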

    // Function used to return a histogram of peak intervals
    function countIntervalsBetweenNearbyPeaks(peaks) {
      var intervalCounts = [];
      peaks.forEach(function(peak, index) {
        // Compare each peak with up to 9 following peaks. Start at 1 so a
        // peak is not compared with itself, and stop at the end of the array.
        for (var i = 1; i < 10 && index + i < peaks.length; i++) {
          var interval = peaks[index + i] - peak;
          var foundInterval = intervalCounts.some(function(intervalCount) {
            if (intervalCount.interval === interval) {
              intervalCount.count++;
              return true;
            }
          });
          if (!foundInterval) {
            intervalCounts.push({
              interval: interval,
              count: 1
            });
          }
        }
      });
      return intervalCounts;
    }

Let's draw the histogram again, this time grouping by target tempo. Because most dance music is between 90BPM and 180BPM (actually, most dance music is between 120 and 140BPM), I'll just assume that any interval suggesting a tempo outside of that range actually represents multiple measures, or some other power-of-two multiple or fraction of the tempo. So: an interval suggesting a BPM of 70 will be interpreted as 140. (This logical step is probably a good example of why out-of-sample testing is important.)

Frequency of intervals grouped by the tempo they suggest.

It's quite clear which candidate is a winner: nearly 10x more intervals point to 140BPM than to its nearest competitor.

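    // Function used to return a histogram of tempo candidates.
    function groupNeighborsByTempo(intervalCounts) {
      var tempoCounts = [];
      intervalCounts.forEach(function(intervalCount) {
        // Skip zero or invalid intervals: they would give an infinite tempo
        // and the halving loop below would never finish
        if (!(intervalCount.interval > 0)) return;

        // Convert an interval (in samples at 44,100 Hz) to tempo
        var theoreticalTempo = 60 / (intervalCount.interval / 44100);

        // Adjust the tempo to fit within the 90-180 BPM range
        while (theoreticalTempo < 90) theoreticalTempo *= 2;
        while (theoreticalTempo > 180) theoreticalTempo /= 2;

        // Round to a whole BPM so near-identical tempos share a bucket
        // (an assumption; raw floats would almost never compare equal)
        theoreticalTempo = Math.round(theoreticalTempo);

        var foundTempo = tempoCounts.some(function(tempoCount) {
          if (tempoCount.tempo === theoreticalTempo) {
            tempoCount.count += intervalCount.count;
            return true;
          }
        });
        if (!foundTempo) {
          tempoCounts.push({
            tempo: theoreticalTempo,
            count: intervalCount.count
          });
        }
      });
      return tempoCounts;
    }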

Review

As far as I'm concerned, we've now established the tempo of this song. Let's go back over our algorithm before doing some out-of-sample testing:

- Run the song through a low-pass filter to isolate the kick drum

- Identify peaks in the song, which we can interpret as drum hits

- Create an array composed of the most common intervals between drum hits

- Group the count of those intervals by the tempos they might represent.

  - We assume that any interval is some power-of-two multiple or fraction of the length of a measure

  - We assume the tempo is between 90 and 180BPM

- Select the tempo that the highest number of intervals point to
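The last step above can be sketched as a sort over the tempo histogram. The function name is mine, and the counts in the example are made up purely for illustration.

```javascript
// Sort tempo candidates by how many intervals support them, most first.
// Expects { tempo, count } objects like those from the grouping step.
function topTempos(tempoCounts, howMany) {
  return tempoCounts
    .slice() // copy so the caller's array is not reordered
    .sort(function (a, b) { return b.count - a.count; })
    .slice(0, howMany);
}

// Made-up counts, for illustration only.
const candidates = [
  { tempo: 105, count: 4 },
  { tempo: 140, count: 41 },
  { tempo: 93, count: 2 }
];
const best = topTempos(candidates, 2);
// best[0].tempo is 140, best[1].tempo is 105
```

Asking for the top five instead of only the winner makes it easier to see near misses when testing.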

Testing

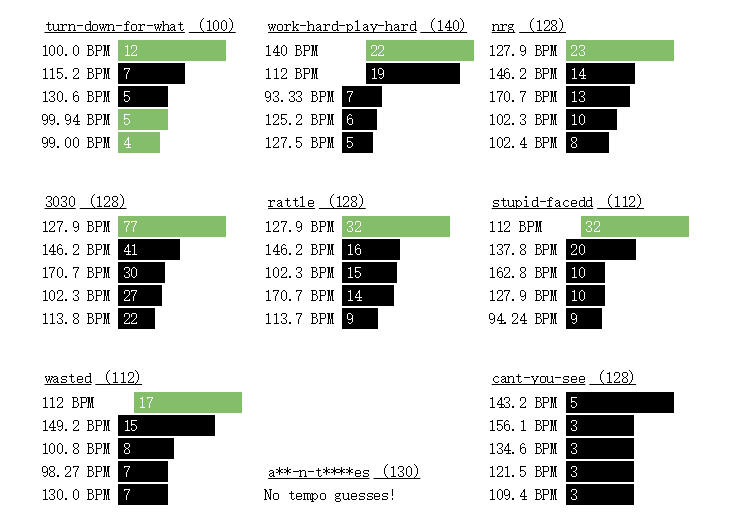

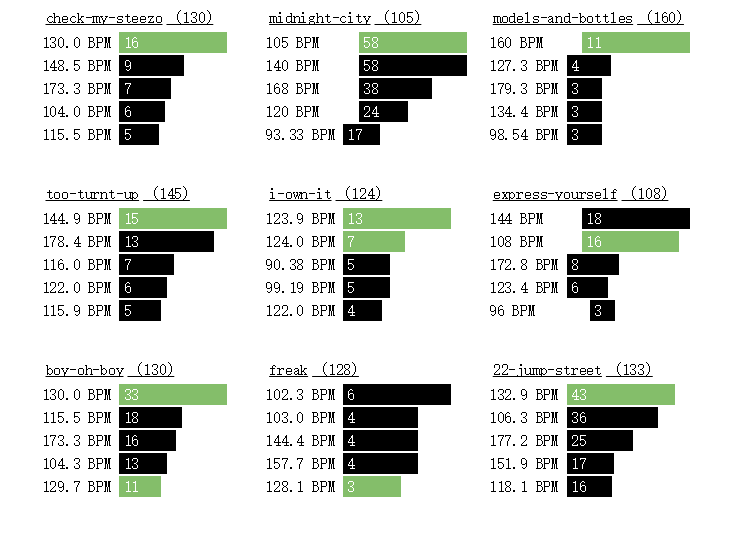

Let's now test it! As a dataset I'm using the 22 Jump Street soundtrack, because it's full of contemporary dance music. I pulled down the 30-second iTunes previews, then grabbed the actual tempos from Beatport. Below are the top five guesses for each song. I've highlighted in green where a guess matches the actual tempo.

Well, that's pretty reassuring! The algorithm accurately predicts tempo 14 times out of 18 tracks, or 78% of the time.

Furthermore, it looks like there's a strong correlation between the number of peaks measured and guess accuracy: there's no instance in which it makes more than three observations of the correct tempo where it doesn't place the correct tempo in the top two guesses. That suggests to me that (1) our assumptions about the relationship between neighboring peaks and tempo are correct and (2) by tweaking the algorithm to surface more peaks we can increase its accuracy.
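One possible tweak for surfacing more peaks is to lower the threshold step by step until enough of them turn up. This is only a sketch: the starting threshold of 0.95, the 0.05 step, the 0.3 floor, and the `minPeaks` parameter are all assumptions of mine, and `findPeaks` stands for any function shaped like `getPeaksAtThreshold(data, threshold)`.

```javascript
// Sketch: relax the threshold until at least `minPeaks` peaks are found.
// `findPeaks(data, threshold)` must return an array of peak positions.
function findEnoughPeaks(data, minPeaks, findPeaks) {
  const step = 0.05;  // how much to relax the threshold each round
  const floor = 0.3;  // never go below this, to avoid counting noise
  let threshold = 0.95;
  let peaks = findPeaks(data, threshold);
  while (peaks.length < minPeaks && threshold - step >= floor) {
    threshold -= step;
    peaks = findPeaks(data, threshold);
  }
  return { threshold: threshold, peaks: peaks };
}
```

Passing `getPeaksAtThreshold` as `findPeaks` keeps the rest of the algorithm unchanged; quiet previews simply end up with a lower threshold than loud ones.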

There's an interesting pattern present in wasted, midnight-city, and express-yourself: the two top tempo candidates are related by a ratio of 4/3, and the lower of the two is correct. In midnight-city, the two are tied, whereas in express-yourself the higher of the two knocks out the actual tempo for the top spot. Listening to each sample, they all have triplets in the drum section, which explains the presence of the higher ratio. The algorithm should be tweaked to identify these sympathetic tempos and fold their results into the lower of the two.
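Here's one way that tweak might look. It's a rough, untested-on-real-music sketch: the function name, the tolerance parameter, and the example counts are all my own inventions, and it only handles a single 4/3 relationship at a time.

```javascript
// Sketch: merge a candidate into a lower candidate when the ratio of the
// two tempos is within `tolerance` of 4/3.
function foldSympatheticTempos(tempoCounts, tolerance) {
  // Work from the lowest tempo upward so counts flow into the lower tempo
  const sorted = tempoCounts
    .map(function (t) { return { tempo: t.tempo, count: t.count }; })
    .sort(function (a, b) { return a.tempo - b.tempo; });
  const merged = [];
  sorted.forEach(function (candidate) {
    const lower = merged.find(function (kept) {
      return Math.abs(candidate.tempo / kept.tempo - 4 / 3) <= tolerance;
    });
    if (lower) {
      lower.count += candidate.count;
    } else {
      merged.push(candidate);
    }
  });
  return merged;
}

// Made-up counts: 160 is 4/3 of 120, so its count folds into 120.
const folded = foldSympatheticTempos([
  { tempo: 120, count: 5 },
  { tempo: 160, count: 6 },
  { tempo: 100, count: 1 }
], 0.02);
// folded is [{ tempo: 100, count: 1 }, { tempo: 120, count: 11 }]
```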

However, this article has exhausted its scope. The bottom line is: you can do pretty good beat detection using JavaScript, the Web Audio API, and a couple of rules of thumb.